A Simple WeChaty Bot with Intelligence Powered by TensorFlow

Background

WeChaty is a powerful library that helps developers interact with WeChat programmatically, implementing bots for a variety of purposes. One very significant purpose of a bot is to chat with users, so that all kinds of businesses can run within a single messaging app instead of a website or a separate app (Raval, 2016). A chatbot is extremely useful for businesses such as customer service. The traditional way to implement a chatbot is to write out every possible response by hand. That costs a large amount of time and money and seems to be 'mission impossible'. With the growth of Artificial Intelligence, machines can learn automatically from a large number of human-written dialogues, so that programmers do not have to 'teach' them step by step. In this article, I will introduce some TensorFlow code to make our chatbots more 'intelligent'.

A brief introduction to Artificial Intelligence

Artificial Intelligence (AI) is a very hot topic at present. A big piece of news is that DeepMind's AlphaGo won three matches against Ke Jie (Russell, 2017). The method used to beat him is not magic: it is machine 'learning' from a very large dataset. With enough training, machines can do better than humans in some specific fields, and Go is a very recent example. Chatbots are a similar topic. We have plenty of resources to train a chatbot, such as the scripts of TV shows and movies. As a result, the problem of intelligence largely becomes a problem of big data. 'Talk is cheap, show me the code.' Let's take a look inside.

Model training

We are going to use the code written by Siraj Raval to train our model: https://github.com/llSourcell/tensorflow_chatbot. To avoid the trouble of fetching the dataset, I highly recommend using my repo, which has the dataset ready. The details of using TensorFlow are not covered in this article, because there are too many of them to write about.

Dependencies

You must install the following dependencies:

- Python 2.7
- numpy (pip install numpy)
- scipy (pip install scipy)
- CUDA and cuDNN (optional, but highly recommended)
- TensorFlow (0.12); a GPU that supports CUDA will greatly accelerate the training process
- The dependencies in ui/requirements.txt: click==6.6, Flask==0.11.1, itsdangerous==0.24, Jinja2==2.8, MarkupSafe==0.23, Werkzeug==0.11.10

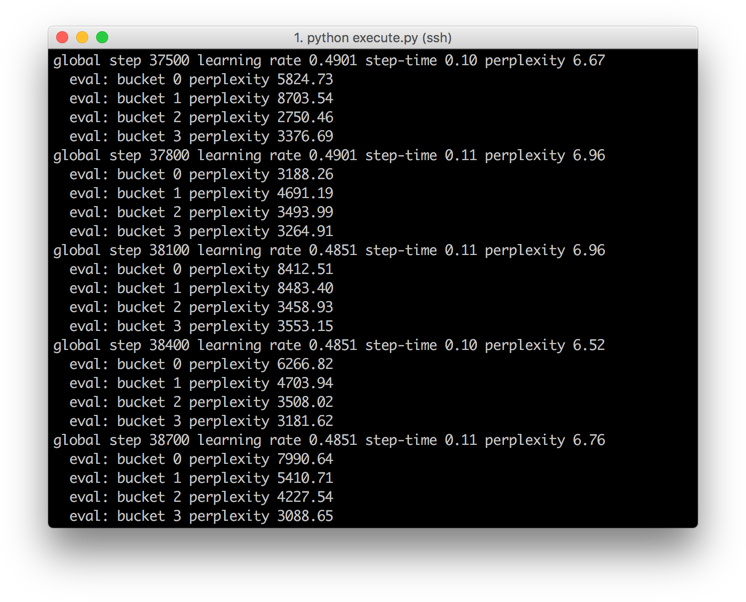

Train the model

In the project directory, execute python execute.py to start the training process.

Note: The training process takes a lot of time, even on good GPUs. In my case, it took over 1 hour on a GTX 1080 to bring the perplexity below 10. The lower the perplexity, the better the quality of the chatbot's responses.
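Perplexity is the exponential of the average per-token cross-entropy loss (measured in nats), so a perplexity below 10 means the model is, on average, about as uncertain as if it were picking uniformly among fewer than 10 words. The minimal sketch below assumes you already have per-token losses; the training script reports its own perplexity, and `perplexity` here is a hypothetical helper for illustration only.

```javascript
// Hypothetical helper: perplexity = exp(mean cross-entropy), losses in nats.
function perplexity(tokenLosses) {
  if (tokenLosses.length === 0) {
    throw new Error('need at least one token loss');
  }
  const mean = tokenLosses.reduce((sum, x) => sum + x, 0) / tokenLosses.length;
  return Math.exp(mean);
}

// A model that gives probability 1/8 to every correct next word has a
// per-token loss of ln(8), and therefore a perplexity of 8.
const losses = [Math.log(8), Math.log(8), Math.log(8)];
console.log(perplexity(losses).toFixed(2)); // prints "8.00"
```

Halving the perplexity from 20 to 10 corresponds to lowering the average loss by ln(2), about 0.69 nats per token.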

When you feel your model is ready, just press Ctrl + C to terminate the training process.

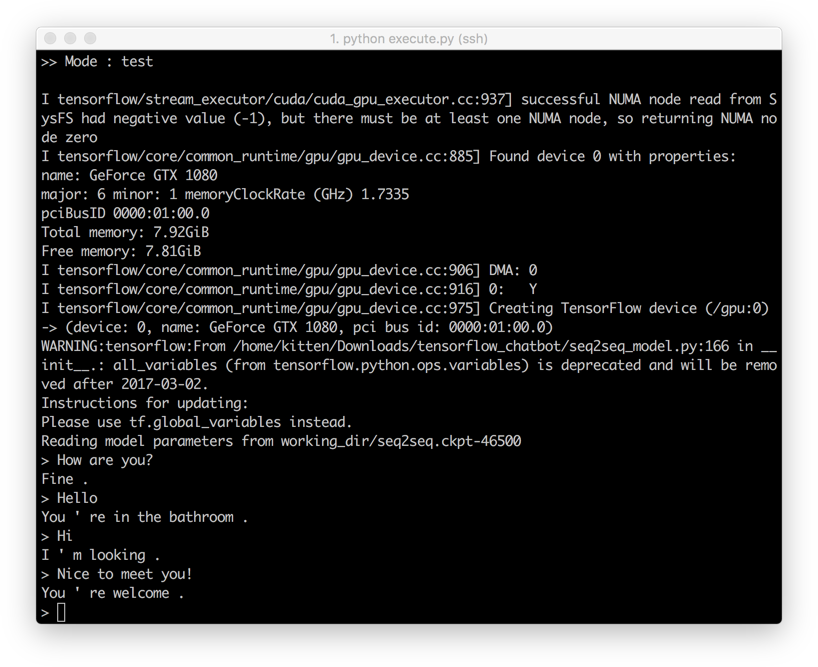

Test the model

Modify seq2seq_serve.ini, changing mode = train to mode = test. Then execute python execute.py again. The 'test' UI will be presented:

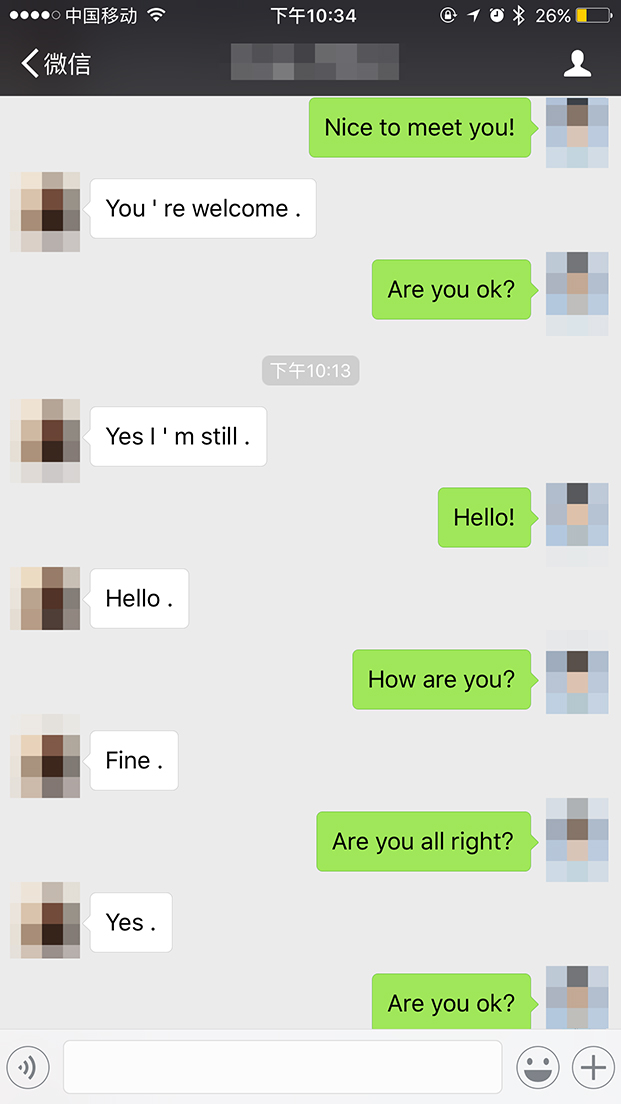

Well, it doesn't look so smart. As mentioned before, the quality of AI is highly dependent on the dataset. Our dataset is the Cornell Movie Dialogue dataset, and our neural network is relatively simple. Consequently, our bot is not very intelligent.

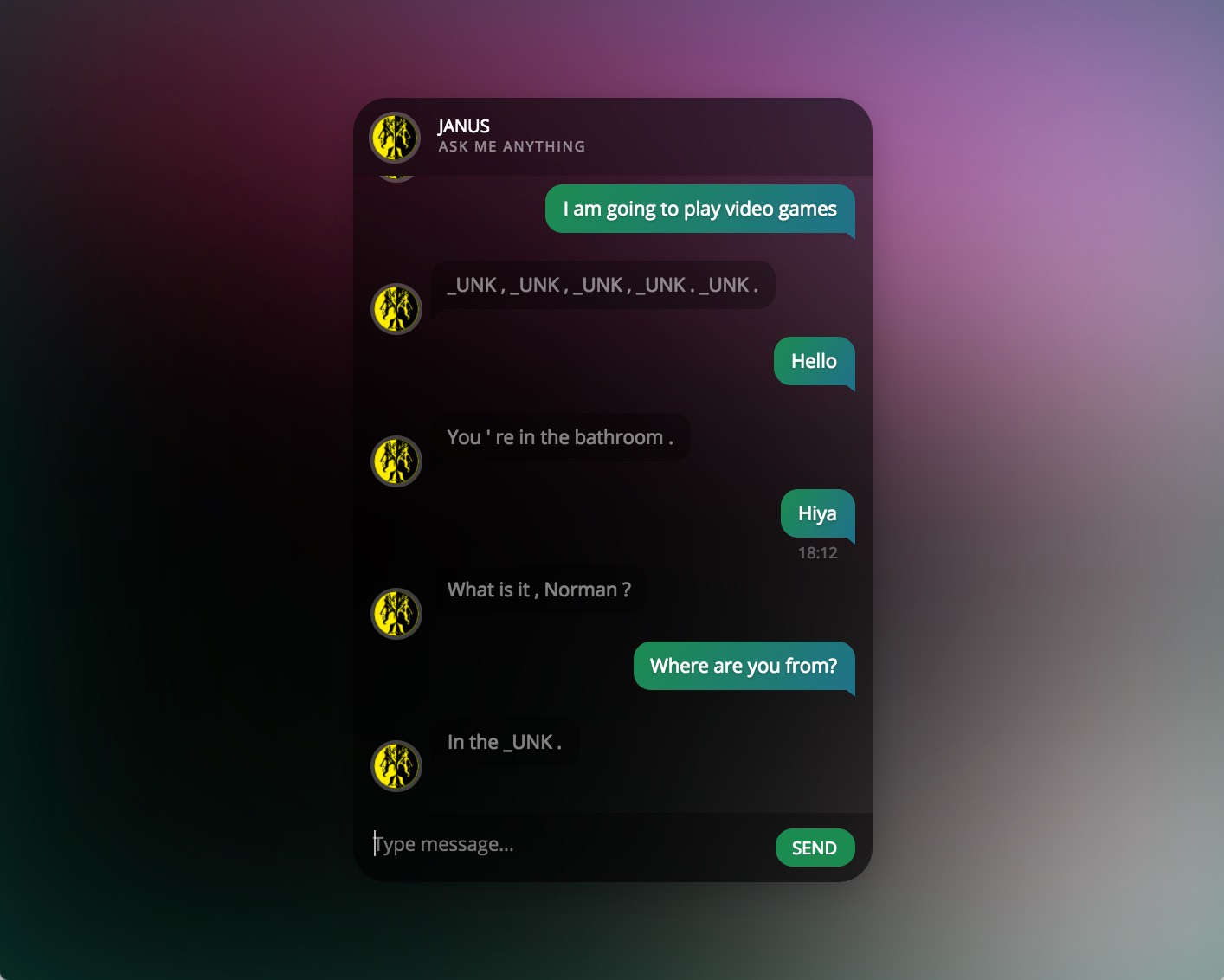

Providing an HTTP API for WeChaty

Fortunately, this repo already provides an HTTP server built with Flask. We can start it by executing PYTHONPATH=$(pwd) python ui/app.py. After that, we can see a very simple UI for this chatbot:

Note: the token _UNK stands for words that are not in the model's vocabulary, such as names of video games and places.
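The _UNK token comes from how seq2seq models handle vocabulary: only a fixed set of words (typically the most frequent ones in the training data) get their own IDs, and every other word is mapped to one shared "unknown" ID. The sketch below illustrates the idea with a tiny hypothetical vocabulary and naive whitespace tokenization; the special tokens mirror those in TensorFlow's seq2seq translation tutorial, but the repo's actual vocabulary files and tokenizer may differ.

```javascript
// Hypothetical vocabulary: a real one holds thousands of frequent words
// from the training corpus, plus the special tokens below.
const vocab = new Map([
  ['_PAD', 0],
  ['_GO', 1],
  ['_EOS', 2],
  ['_UNK', 3],
  ['i', 4],
  ['like', 5],
  ['playing', 6],
]);
const UNK_ID = vocab.get('_UNK');

// Lowercase, split on whitespace, and map out-of-vocabulary words to _UNK.
function encode(sentence) {
  return sentence
    .toLowerCase()
    .split(/\s+/)
    .filter((word) => word.length > 0)
    .map((word) => (vocab.has(word) ? vocab.get(word) : UNK_ID));
}

// Reverse mapping, used to turn output IDs back into words.
const idToWord = new Map([...vocab].map(([word, id]) => [id, word]));

function decode(ids) {
  return ids.map((id) => idToWord.get(id) ?? '_UNK').join(' ');
}

const ids = encode('I like playing Zelda');
console.log(ids); // [ 4, 5, 6, 3 ]
console.log(decode(ids)); // i like playing _UNK
```

Once a word has been replaced by _UNK, the original word is lost, which is why the bot can only answer with _UNK where a name or place would be.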

As seen in ui/app.py, an API for chatting with this bot has been defined:

@app.route('/message', methods=['POST'])
def reply():
    return jsonify({'text': execute.decode_line(sess, model, enc_vocab, rev_dec_vocab, request.form['msg'])})

We will take advantage of this API in WeChaty.
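The endpoint reads the user's text from the msg field of a form-encoded POST body and returns JSON of the form {"text": "..."}. The sketch below calls it from Node.js with the built-in fetch and URLSearchParams (Node 18 or later assumed), as an alternative to the request package that bot.js uses; buildMessageRequest, parseReply and askBot are hypothetical helper names, and the URL assumes Flask's default port 5000 on localhost.

```javascript
// Assumed address of the Flask server started with ui/app.py.
const API_URL = 'http://localhost:5000/message';

// Form-encoded POST body, matching request.form['msg'] on the Flask side.
function buildMessageRequest(msg) {
  return {
    method: 'POST',
    headers: { 'Content-Type': 'application/x-www-form-urlencoded' },
    body: new URLSearchParams({ msg }).toString(),
  };
}

// The endpoint answers with JSON like {"text": "..."}.
function parseReply(bodyText) {
  const data = JSON.parse(bodyText);
  if (typeof data.text !== 'string') {
    throw new Error('unexpected reply: ' + bodyText);
  }
  return data.text;
}

// Send one message to the chatbot and resolve with its reply.
async function askBot(msg) {
  const res = await fetch(API_URL, buildMessageRequest(msg));
  if (!res.ok) {
    throw new Error(`HTTP ${res.status}`);
  }
  return parseReply(await res.text());
}
```

With the Flask server running, askBot('hello').then(console.log).catch(console.error) should print the bot's reply. Keeping the request building and reply parsing in separate functions makes them easy to test without a running server.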

Set up WeChaty

# In our project directory:
npm init -y
npm install --save wechaty
# bot.js also depends on the request package:
npm install --save request

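Write a simple bot that sends every text message, except those in groups or sent by the bot itself, to the AI server (Flask) from the last section. The script uses the early WeChaty API of the time (Wechaty.instance(), bot.init(), MsgType); later WeChaty releases changed some of these names, so you may need an older version or small adaptations: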

// bot.js
const { Wechaty, MsgType } = require('wechaty');
const request = require('request');

const bot = Wechaty.instance({ profile: 'tensorflow' });

bot.on('message', (message) => {
  // Only handle text messages that are neither group (room) messages nor posted by the bot itself
  if (!message.room() && !message.self() && message.type() === MsgType.TEXT) {
    const content = message.content();
    request.post({
      url: 'http://localhost:5000/message', // the Flask server from the last section
      form: { msg: content },
    }, (err, httpResponse, body) => {
      if (err || !body) {
        console.error('AI server error:', err);
        return;
      }
      const data = JSON.parse(body);
      const response = data.text;
      console.log('message:', content, 'response:', response);
      message.say(response);
    });
  }
});

bot.init();

Run this script with node bot.js. Each time it receives a message, WeChaty posts it to the AI API (the Flask web server), gets the response, and replies with it: [Message] <=> [WeChaty] <=> [AI].
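Because the model emits _UNK for out-of-vocabulary words, bot.js forwards replies containing _UNK to WeChat users verbatim. One optional tweak, sketched below as an assumption rather than part of the original project, is to strip _UNK tokens before replying and fall back to a canned answer when nothing is left; cleanReply and the fallback text are hypothetical.

```javascript
// Hypothetical post-processing for replies from the Flask /message endpoint:
// drop _UNK tokens, and use a fallback when the reply is empty afterwards.
function cleanReply(text, fallback = "Sorry, I don't understand.") {
  const words = String(text ?? '')
    .split(/\s+/)
    .filter((word) => word.length > 0 && word !== '_UNK');
  return words.length > 0 ? words.join(' ') : fallback;
}

console.log(cleanReply('i like _UNK .')); // i like .
console.log(cleanReply('_UNK _UNK')); // Sorry, I don't understand.
```

In bot.js, you would then call message.say(cleanReply(response)) instead of message.say(response).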

We have now implemented a very basic chatbot with TensorFlow. The code can be found at: https://github.com/imWildCat/wechaty_and_tensorflow_chatbot

Limitations and summary

The chatbot is not very smart because of insufficient data. In addition, it can only 'understand' English sentences, not other languages; when it comes to Chinese, things could be much more complex. The whole topic of Artificial Intelligence and Machine Learning is too large to cover in this article. The aim of this blog post is just to give a glimpse of the potential rather than the details.

Although there will be a number of challenges ahead, it is worth learning more about Machine Learning so that you can power your bot with Artificial Intelligence.

Bibliography

- Siraj Raval, 2016. How to Make an Amazing Tensorflow Chatbot Easily. Retrieved from: https://www.youtube.com/watch?v=SJDEOWLHYVo
- Jon Russell, 2017. Google's AlphaGo AI wins three-match series against the world's best Go player. Retrieved from: https://techcrunch.com/2017/05/24/alphago-beats-planets-best-human-go-player-ke-jie/